Is It Time to Retire Minimum System Requirements?

In order to read this article, you will need a 64-bit quad-core CPU with SSE4.2 instruction support, 8 GB RAM, a DirectX-compatible graphics card and an HTML-capable web browser. Is this useless? I agree.

By: Hernán Quijano

This problem is not new

Imagine you are eager to start your project. Let’s use a Media & Entertainment workflow like 3D Modeling & Animation (although you could apply this to any other workflow). You or your company have invested thousands of dollars in a 3D application like Autodesk 3ds Max or Maya, you install it on a workstation that your I.T. department gave you, and everything seems to be off to a great start!

You start placing a few low-polygon primitives, and the instant you add a little complexity to your 3D models or modifiers, your system starts choking and stalling. It lets you keep going, but it becomes more annoying and makes you less productive by the minute.

Let me illustrate this with a picture:

This happened to me in the 90s. I was in high school and had bought my first software application: 3D Studio R4 for DOS. I was so frustrated. I had worked summers and holidays to save US$1,500 to buy the student license (yeah, back then students had to pay at least 50% of MSRP). Why was I able to model and animate cubes, but the second I made a sphere or a teapot, my poor 486DX 50 MHz couldn’t handle it?

I wanted to become a 3D Animator; instead, I went deep into performance tuning, a multi-decade journey to understand the performance impact of every component inside my PC. That journey led me to become an Electronic Engineer at Intel, instead of the Animator at Pixar that I had originally envisioned. By the way…

Happy 30-year anniversary, Toy Story! The technical and artistic majesty achieved in 1995 is indistinguishable from magic! I’m sure Arthur C. Clarke would agree with me.

Back to the main story… Before I ordered the software, I had done my homework. After all, this was a huge financial investment, even by today’s prices. I had talked to the experts, and my system met the needed requirements… The Minimum System Requirements (MSR)!

You might say: “Nice anecdote from 30+ years ago, but we are in 2025 and computers are orders of magnitude faster. Things have to be different today, right? … right?” Wrong. The complexity of the software has also increased, so when it comes to MSRs, we have not made a lot of progress.

The Problem with Minimum System Requirements

For decades, Independent Software Vendors (ISVs) have published Minimum System Requirements (MSRs) to establish a minimum baseline to install and operate an application. The problem is that MSRs are not just unhelpful: they are actively holding back the whole industry, including users, I.T. departments, PC OEMs and the ISVs themselves.

Almost any computer is good to start a project, but users need a system that will empower them all the way to the finish line!

Let me illustrate my point:

The user’s experience with the application falls off a cliff the second the project’s complexity increases beyond, you guessed it, the bare minimum. Creativity and experimentation die when users are afraid of suffocating their machine.

I.T. departments need guidance to empower their users with computing tools that are capable of delivering the level of project complexity the company needs. They buy hardware based on MSRs, and when the power user asks for more, you can see a sign coming out of their head that says one or many of the following: “Do you think you know more than the ISV? You don’t need more, you just want a fancier computer. Are you aware we are budget constrained?” and the like.

The PC OEMs (Dell, HP, Lenovo, Puget Systems, BOXX, etc.) and IHVs (Intel, AMD, Nvidia, Apple) test as much as they can and run some of the few benchmarks out there, but they are not experts in the applications, so a lot of subjective knowledge passed down across generations gets used to position hardware solutions. The reality is that PC OEMs don’t have the deep application-level expertise and, in many cases, lack the executive support to do in-house pathfinding to discover workloads from the latest workflows. Believe me, I tried for years.

Finally, the ISVs set the lowest bar (MSRs) with the incentive of claiming that their application runs efficiently on very humble hardware. That sounds good, but it misleads users into feeling that the computer they already have is enough, while in reality the latest version of the software is more capable, and therefore more complex and computationally demanding. Bottom line: users get a subpar experience with the software.

Vague casts the wider net

To make things worse, MSRs have remained so vague that they lack value and, most importantly, they have not provided a system recommendation for an optimal user experience.

Let’s go through some examples from multiple ISVs’ MSRs:

CPUs

64-bit Intel® or AMD® with SSE4.2 instruction set

For macOS, Apple Silicon arm64 or Intel x86-64 processor, such as Intel Core 2 Duo or later

RAM Memory

8 GB of RAM (16 GB or more recommended)

At least 8 GB RAM

32 GB or higher recommended and 64GB strongly recommended for fluid simulations

Other

3 Button mouse required

When it comes to GPUs, the MSRs are typically a list of minimum VRAM, OpenGL, OpenCL and driver versions.

But wait, some ISVs also have “Recommended System Requirements”. Aren’t those useful? Not really, or at least I haven’t seen a really good one. They are just “a little bit higher than the MSRs”. For example: Minimum: 4-core CPU, Recommended: 6-core CPU. That is baseless, and it does not even consider that performance differs a lot across CPU generations and vendors, even at the same core count and frequency.

Running any application on a system that only meets the Minimum System Requirements is like racing in Formula 1 or Nascar and showing up in an old hand-me-down Toyota Corolla your grandparents gave you, because the requirements were to drive a car with 4 tires, 1 engine & 1 steering wheel! It doesn’t matter if you are a good or a great driver: you won’t be able to show what you can do, and you will likely hurt your brand and put the project at risk in the process.

I particularly like the call above that implies that an “Intel Core 2 Duo or later” is what you need to get started. I was there for the launch of that CPU in 2006! 19 years ago. Would you dare to run the latest version of the most popular VFX compositing application on a 2006 system? I rest my case!

Industry strengths and weaknesses

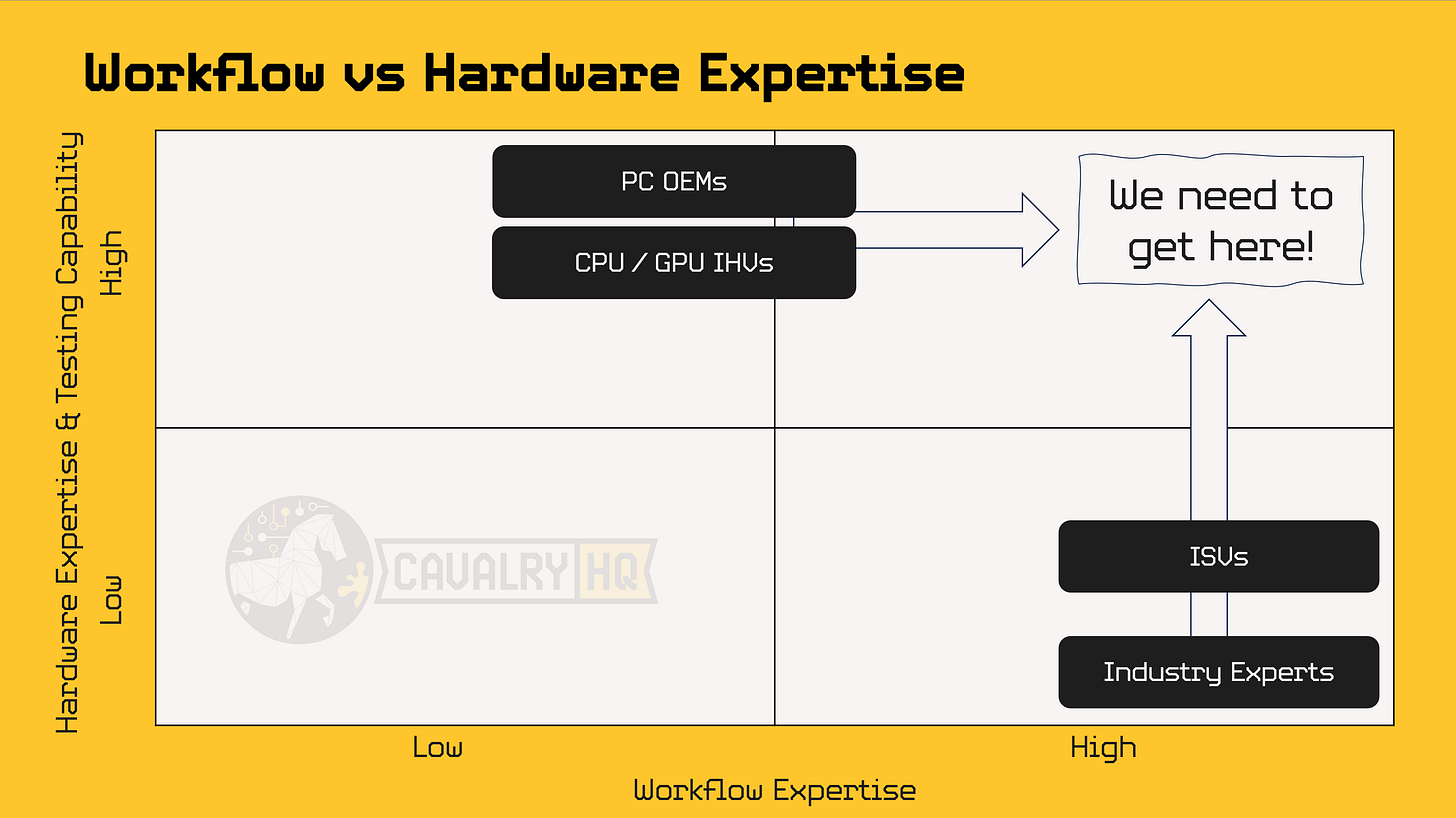

Let’s talk about the areas of expertise for each type of industry player:

Note: I know there are exceptions; this is just a high-level summary of what I have seen in the industry.

PC OEMs & CPU/GPU IHVs (Grouping both due to similarities)

Strengths

Hardware expertise

PC OEMs: Systems Level

CPU/GPU IHVs: Component Level

Strong testing & hardware validation capabilities

Weaknesses

Software Expertise. Application specific know-how

Access to Industry Experts mostly from marketing teams

ISVs

Strengths

Software application specific expertise

Deeper Partnerships with Industry Experts (power users)

Deep Workflow understanding

Weaknesses

Hardware testing and validation for minor CPU/GPU/Memory SKUs

Most HW Certification processes are costly, time consuming and focus on functionality rather than performance

Industry Experts

Strengths

High expertise in Software applications and workflows (how they perform their craft)

Knowledge of application pain points

SW application experience to define low-to-high complexity workloads

Great at doing one-off experiments with their project. i.e.:

Is their PC good enough to get started? how does it “feel”?

Which application features should they avoid due to fear of lack of compute?

Weaknesses

Technical / computer knowledge is not their top area of expertise, their craft is

Limited access to multiple hardware SKUs

Finally, it is not the user’s job to figure this out, but they could contribute a lot, so the industry should partner with them.

Let me try to summarize this with a chart:

I applaud this FAQ developed by Lenovo about System Requirements, it touches on a lot of the points we cover today:

https://www.lenovo.com/us/en/glossary/system-requirements

Key players: Benchmarking Committees & Developers

Benchmarks are super important because they give the hardware industry something easy to run. However, not all benchmarks are created equal! There are synthetic benchmarks that compute random workloads for the sake of stressing the hardware and, more often than not, they DO NOT correlate with the real-world performance of the applications we care about. Therefore, I will pass-that-mark! (pun intended)

Let’s talk about benchmarks based on real-world workloads. The first thing I must mention: developing and maintaining a benchmark is hard work, and I want to applaud the effort of a couple of them that, in my professional opinion, are doing an excellent job!

SPEC/GWPG: The Standard Performance Evaluation Corporation (SPEC) is a non-profit corporation that establishes and maintains standardized, vendor-agnostic benchmarks and tools to evaluate performance and energy efficiency for the newest generations of computing systems.

The SPEC group we care the most about for our industries is the Graphics and Workstation Performance Group (GWPG), which develops a range of graphics and workstation benchmarks and performance reporting procedures. This committee is formed by members from participating companies like Dell, HP, Lenovo, AMD, Intel and Nvidia. It’s important to recognize the companies that support this effort and the members who work on it beyond their daily job.

GWPG produces 3 categories of benchmarks:

SPECviewperf: Focused on measuring graphics performance. This means that the workloads are selected to disregard other system dependencies and focus on what happens in the GPU. Important, yet very specific. GPUs should be selected based on it, but it is not the complete picture for selecting complete systems.

SPECapc: This family of benchmarks is specific to each application. It combines multiple CPU-, GPU- and storage-bound workloads for Maya, SolidWorks and Creo, among others.

SPECworkstation: This is the broadest and most versatile benchmark, and it is great for system-level selection. It includes workloads for CPU, GPU and storage for multiple industries and applications. In my opinion, this is the most important of them all for PC OEMs, component IHVs and I.T. departments to select systems. They can always look at the industry sub-score that is relevant to them, including: AI & Machine Learning, Energy, Financial Services, Life Sciences, Media & Entertainment, Product Design, and Productivity & Development.

For more details, visit: https://gwpg.spec.org/about-gwpg/

Puget Systems - PugetBench: Puget Systems is a computer OEM that is very well regarded in the industry, not only because of their high-quality workstations, but also because of their capacity to give objective (please read: data-based) recommendations. Like the rest of the industry, Puget Systems ran into the issue of how difficult it is to translate technical computer specifications into value for each workflow. The great differentiator is that Puget Systems decided to be proactive and do a lot to answer the hardest question: “So what?”

This workstation has many cores! So what?

This workstation has the latest GPU! So what?

What is the impact that each component and system bring to the user?

Those are just a few examples. For more information, visit: https://www.pugetsystems.com/pugetbench/

The common need that all benchmark developers have is simple, yet very complex: good quality workloads! They are the “thing”, the process, the application feature, you are trying to measure. After you have a good workload, there is work to do on automation, packaging, reporting, but without a good workload, you have…

ISV Benchmarking Initiative Award

If CavalryHQ had awards, this one would go to: drum roll please…

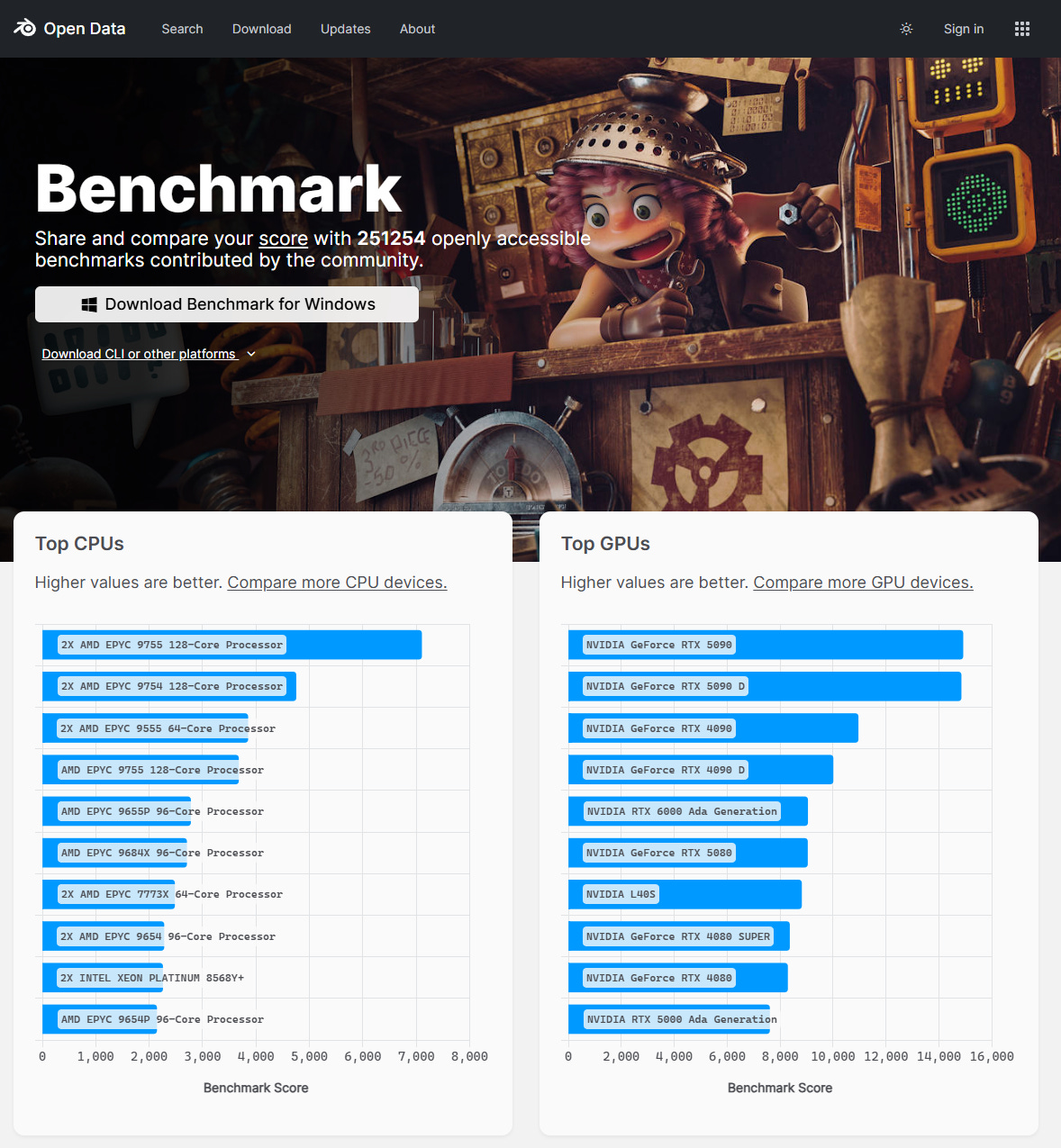

Blender Benchmark

The Blender Foundation has been a role model when it comes to helping the industry recommend hardware to make Blender fly! They provide a downloadable benchmark that is ready to run from the command line (CLI), which is very important for automation (remember, lab technicians don’t and should not need to be Blender experts to measure its performance). It also includes sub-scores for CPUs and GPUs. Could it be better? Absolutely. I wish it included other workloads, not only related to rendering, but focused on loading times, Geometry Nodes and many other things that the user will do in front of the computer. Having said that, I wish every ISV had something like this.

This is what it looks like:

For more information, go to:

https://opendata.blender.org/

There are other efforts where ISVs collaborate with Committees to develop workloads, but they are the exception, not the norm.

There has to be a better way!

It’s easy to complain about a problem; the value is in offering a potential solution!

This article is the first of a series, with the goal of proposing a solution, or at least a big step forward in the right direction. Like any effort worth doing, it requires collaboration from all players mentioned.

This is the proposed solution:

ISVs

For your top applications, make multiple High-Quality workloads* available. Give Benchmarking Committees permission to integrate them into their suites. Clarify that MSRs are barely enough to install the application and that the complexity of the project should define the recommended hardware. Remove licensing barriers so anyone can run the benchmark.

PC OEMs and CPU/GPU IHVs

When launching workstations, CPUs or GPUs, use the performance data from such workloads, run on entry-level, mid-range and high-end systems or components, to show end users the impact of using different levels of hardware at the corresponding price levels.

Industry Experts

Donate projects, classify them into low, medium, high or extreme levels of complexity and explain why (model, textures, particle simulations, etc.). Remember, nobody knows your pain points better than you. Keep a balance across your workflow: have workloads for Import, Edit and Export types of operations. Provide instructions to reproduce them. Bottom line, become part of the solution by participating in the committees, for example: https://gwpg.spec.org/participation/ or https://www.pugetsystems.com/pugetbench/development-program/

Benchmarking Committees

Continue to be clear about the help you need! Great job on the websites I just mentioned! Remove barriers so Tech Press and Influencers can run the benchmarks.

* High-Quality Workload: I will likely expand on this in the future, but for now, let’s just say that a High-Quality workload is repeatable, requires no license, has instructions for reproduction by a non-expert user of the application, covers different parts of the workflow (e.g. import/load, edit, export) and includes variation toggles for low, mid, high and extreme complexity. Bonus: it can be run from a command line (CLI); if not, automation could be achieved from a trace capture.
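To make that checklist a bit more concrete, here is a minimal Python sketch of how a workload suite could be described and checked against it. Everything in it is my own assumption for illustration: the `Workload` fields, the `quality_gaps` and `is_repeatable` helpers, the example workload names and the 5% run-to-run variation threshold are hypothetical and do not come from SPEC, Puget Systems or any ISV.

```python
import statistics
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

# Hypothetical vocabulary taken from the checklist above.
STAGES = ("import", "edit", "export")
COMPLEXITY_LEVELS = ("low", "mid", "high", "extreme")


@dataclass
class Workload:
    """Illustrative description of one workload (all fields are assumptions)."""
    name: str
    stage: str                          # which part of the workflow it covers
    complexity_levels: Tuple[str, ...]  # complexity toggles it supports
    requires_license: bool
    has_repro_instructions: bool
    cli_command: Optional[str] = None   # bonus: runnable from a command line


def quality_gaps(workloads: Sequence[Workload]) -> List[str]:
    """List where a suite falls short of the checklist, in plain words."""
    gaps = []
    for w in workloads:
        if w.requires_license:
            gaps.append(f"{w.name}: requires a license")
        if not w.has_repro_instructions:
            gaps.append(f"{w.name}: no reproduction instructions")
        missing = [lvl for lvl in COMPLEXITY_LEVELS
                   if lvl not in w.complexity_levels]
        if missing:
            gaps.append(
                f"{w.name}: missing complexity levels: {', '.join(missing)}")
    covered = {w.stage for w in workloads}
    for stage in STAGES:
        if stage not in covered:
            gaps.append(f"suite: no workload covers the {stage} stage")
    return gaps


def is_repeatable(run_times_s: Sequence[float], max_cv: float = 0.05) -> bool:
    """Treat a workload as repeatable when run-to-run variation is small.

    Uses the coefficient of variation (stdev / mean). The 5% default is an
    arbitrary placeholder, not an industry standard.
    """
    if len(run_times_s) < 2:
        return False
    mean = statistics.mean(run_times_s)
    if mean <= 0:
        return False
    return statistics.stdev(run_times_s) / mean <= max_cv


# Made-up example suite: one complete workload and one with gaps.
scene_load = Workload("scene_load", "import", COMPLEXITY_LEVELS,
                      requires_license=False, has_repro_instructions=True,
                      cli_command="run_scene_load")
fluid_sim = Workload("fluid_sim", "edit", ("low", "high"),
                     requires_license=True, has_repro_instructions=True)

for gap in quality_gaps([scene_load, fluid_sim]):
    print(gap)
```

The point of the sketch is that most of the checklist can be verified mechanically before anyone spends lab time on a workload. Only repeatability needs real timing data, which is why it is a separate helper that takes measured run times.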

Conclusions

We need to work together as an industry. No single entity or player can create great solutions alone.

Minimum System Requirements are just not useful. They are barely a recipe to avoid installation issues.

If power users, ISVs, PC OEMs and CPU/GPU/Storage/Memory IHVs support Benchmarking Committees, WE ALL WIN! Better value-based guidance for users, a better user experience with key ISV applications, better sales for hardware, invaluable feedback for future silicon and less subjectivity in the selection process.

Do you want to help but don’t know where to start?

Need an introduction to any of the key industry players?

Please reach out!