The Benchmarking Blind Spot: Getting Real Performance Answers for Your Professional Workflow

Most benchmarks miss the mark. Learn how to objectively measure what matters for your workflow and make informed hardware decisions

Many articles and reviewers do their best, yet they get it wrong

A new Workstation is out, a new CPU is launched, a new GPU arrives; we can’t wait to see what it can do and we rush to read the reviews. There is a lot of commentary around specs, core counts, frequencies, bits and bytes. Then comes the hardest question: “So what?”

What can all that new technology do for my software application and therefore for my productivity, the quality of my work, my income, etc.? There has to be a way to objectively measure performance so we can understand the benefits of new technology, right? Right?

Well, of course! That’s what benchmarks are for: Maxon Cinebench [1], Blender Benchmark [2], and plenty of others. Most of the time, reviewers are not experts on most software applications, so they trust that the software vendor (ISV) and its benchmark will properly guide them. I mean, why wouldn’t they? Note: I’m ignoring synthetic benchmarks (e.g. Passmark) on purpose because they are even more misleading!

Even if some of these benchmarks are based on real parts of applications, there is one problem: they must be taken in context, because they are not a good proxy for the performance of the application as a whole. They are a good proxy for a very specific set of workloads within that application; in the 3D world, that is typically render time! This is the case for Blender Benchmark and Cinebench. So if a Workstation can render fast, it’s the right machine for you? Not so fast (pun intended).

Typically, a CPU with many cores will be good for rendering. After all, it is an embarrassingly parallel [3] workload, where a frame can be split into tiles, or multiple frames or batches of frames can be processed separately and independently. We won’t do a deep dive on Amdahl’s Law [4] today, but maybe in the future; would you like me to? The point is that 3D modeling, texturing, animation, rigging, and the majority of the tasks that happen in your 3D application when you are not rendering are massively different in nature, and therefore their compute requirements are also very far apart. In fact, most of the time you are manipulating vectors (CAD) and interacting with your application, and for that you need very few, really fast cores. Having a top-of-the-line CPU from the newest architecture makes all the difference when you are in front of the system, regardless of rendering time.

Other factors can also play a role depending on scene complexity, like having plenty of RAM available, CPU cache sizes, among others.
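To see why core count helps rendering far more than interactive work, Amdahl’s Law gives the theoretical speedup as 1 / ((1 - p) + p / n), where p is the fraction of the work that can run in parallel and n is the number of cores. The sketch below is only an illustration: the two parallel fractions are assumptions picked for the example, not measurements of any real application.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Theoretical speedup over a single core, per Amdahl's Law."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumed (hypothetical) parallel fractions, for illustration only.
assumed_workloads = {
    "rendering": 0.99,            # almost everything can be split into tiles
    "interactive editing": 0.30,  # mostly waits on one or two threads
}

for name, fraction in assumed_workloads.items():
    for cores in (8, 64):
        speedup = amdahl_speedup(fraction, cores)
        print(f"{name:20s} {cores:3d} cores -> {speedup:5.2f}x")
```

Under these assumed fractions, going from 8 to 64 cores multiplies the rendering speedup several times over, while the interactive case barely moves. That is the gap a render-only benchmark hides.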

Based on the example above, my hot take is that you would be way more productive in a 3D application using a new Intel Core Ultra or AMD Ryzen CPU than an older, higher-core-count Intel Xeon W or AMD Threadripper. Having said that, when export operations arrive, the big brother CPUs (Xeon W and Threadripper) will leave Core Ultra and Ryzen in the dust.

If CPUs were vehicles, Intel Core Ultra and AMD Ryzen would be sports motorcycles: they will always win the quarter-mile races. However, when you need to move a ton of dirt, you will definitely go with a pick-up truck, like Xeon W or Threadripper.
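Which vehicle wins depends on how your week splits between waiting on interactive work and waiting on exports. The sketch below makes that trade-off explicit. Every number in it is a made-up assumption for illustration (the speedup factors and the weekly hours are not benchmark results), and `Machine` and `weekly_wait_hours` are hypothetical names; substitute your own measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Machine:
    name: str
    interactive_speedup: float  # relative to your current machine (assumed)
    export_speedup: float       # relative to your current machine (assumed)


def weekly_wait_hours(machine: Machine,
                      interactive_wait: float,
                      export_wait: float) -> float:
    """Hours per week spent waiting on the machine, given today's baseline."""
    return (interactive_wait / machine.interactive_speedup
            + export_wait / machine.export_speedup)


# Hypothetical machines; the factors are illustrative, not measured.
motorcycle = Machine("few, fast cores", interactive_speedup=1.4, export_speedup=1.1)
truck = Machine("many cores", interactive_speedup=1.0, export_speedup=3.0)

# Two hypothetical users: (interactive wait hours, export wait hours) per week.
for label, (interactive, export) in {"mostly editing": (10.0, 4.0),
                                     "mostly exporting": (3.0, 12.0)}.items():
    for machine in (motorcycle, truck):
        hours = weekly_wait_hours(machine, interactive, export)
        print(f"{label:17s} {machine.name:16s} {hours:5.1f} h/week")
```

With these assumed inputs the ranking flips between the two users, which is why a single render score cannot pick the machine for you.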

How do we fix this?

1. Be aware and trust your gut and experience

If you are reading this, my guess is that you are an experienced user of at least a handful of professional applications in your industry/workflow. Go try different Workstations, whether PC OEM samples or a colleague’s machine, and run some of the projects that made you shed a tear. If it feels off, it probably is. Keep digging until you see your project running smoothly and, if it does not, identify the bottleneck. Think about where the complexity will increase in future projects and try to simulate that.

I feel this can be yet another topic for the Bin List, let me know if you agree. If you need help on this process, reach out. This should be objective, but there are a lot of educated guesses that will help you along the process.

2. ISVs: Build Better Benchmarks

BBB - trademark pending :-D

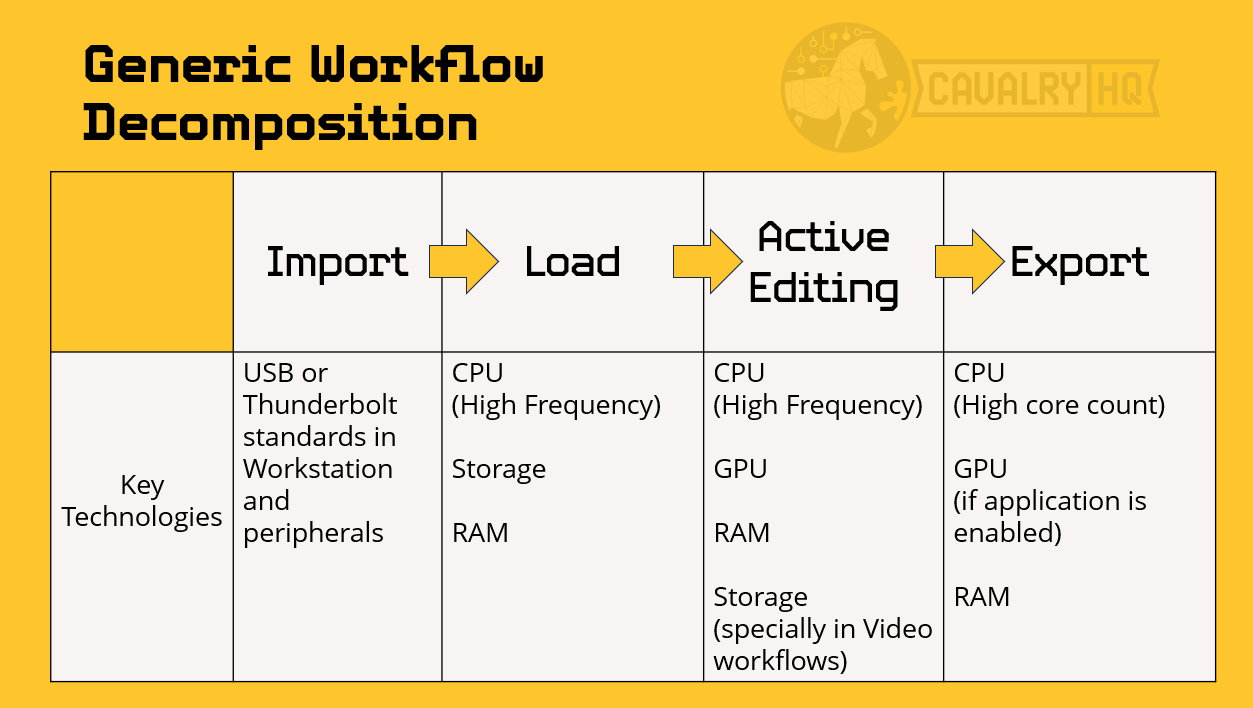

It would be wonderful if Blender Benchmark or Cinebench also had workloads for other parts of the application, not only the “Export” operation of rendering. I like to look at an application as a whole, as a flow that a user follows. Even if every user does things in a different way, there are many commonalities. Such a flow goes like this:

Import

The process of bringing all the footage/media/assets you need into your Workstation. This phase is more about networking, I/O ports and peripherals than compute.

Load

This phase is about opening your project and bringing it and its dependencies into the application; in complex projects this can take several minutes. This process is often ignored, yet a lot happens in terms of storage, memory sub-system, decompression, etc.

Active Editing

This is when you are actually creating! Productivity is defined by you being unleashed in this phase: fast viewports, responsiveness, being able to experiment and never being afraid of “sliding a slider”, because your Workstation can handle anything you want to try in the pursuit of quality and excellence!

Export

This is when you pick your settings and typically go away! Tests and quick experiments typically allow for a coffee refill, while final exports mean progress bars that cook while you sleep, or even jobs you submit to a higher compute solution. Interestingly, this is what most benchmarks test!
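If you want to see where your own time goes across these four phases, a small timing harness is one way to start. The sketch below is a minimal illustration: `PhaseTimer` is a hypothetical helper, and the `time.sleep` calls are placeholders standing in for whatever you actually do in each phase (for example, a scripted project load or export in your application).

```python
import time
from contextlib import contextmanager


class PhaseTimer:
    """Accumulates wall-clock seconds per named workflow phase."""

    def __init__(self):
        self.seconds = {}

    @contextmanager
    def phase(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            elapsed = time.perf_counter() - start
            self.seconds[name] = self.seconds.get(name, 0.0) + elapsed

    def shares(self):
        """Fraction of the total measured time spent in each phase."""
        total = sum(self.seconds.values())
        return {name: secs / total for name, secs in self.seconds.items()} if total else {}


timer = PhaseTimer()
# Placeholder durations; replace each sleep with the real step you want to measure.
with timer.phase("Import"):
    time.sleep(0.01)
with timer.phase("Load"):
    time.sleep(0.02)
with timer.phase("Active Editing"):
    time.sleep(0.05)
with timer.phase("Export"):
    time.sleep(0.02)

for name, share in timer.shares().items():
    print(f"{name:15s} {timer.seconds[name]:6.3f} s  {share:6.1%}")
```

Wall-clock time is used on purpose, since what matters here is how long you wait, not how busy the CPU was. Measuring the same project on two machines and comparing phase by phase shows which phase a new Workstation actually improves.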

Example: Epic Games - Unreal Engine

There is no benchmark for Unreal Engine out there. Luckily, there are experts who have come to the industry’s rescue and found ways to test Unreal Engine by creating their own workloads. Typically, such workloads focus on:

Compiling Unreal Engine from source code. This is a great workload for programmers, not artists

Light Baking / Build Lights

Compile Shaders

All this is good, but what about really important and modern features like:

Lumen performance

Nanite performance

Loading time

Viewport performance / preview FPS

Memory usage by tile on World Partition

Metahuman Creator

Blueprint compiling performance

… to name a few.

Hope: There are great benchmarks out there!

There are people, companies and organizations that “Get it” and are developing great Workstation benchmarks and the best news is that YOU can help them with your expertise!

SPEC Graphics & Workstation Performance Group (GWPG) [5]

This Committee has amazing benchmarks with complementary focuses:

Graphics: SPECviewperf

Focused on the graphics hardware for professional applications, this benchmark includes workloads for popular titles like 3ds Max, Catia, Creo, Maya and Solidworks. And yes, there are new ones we needed, like Unreal Engine workloads for Lumen, Nanite and Temporal Super Resolution. For a full list and details, visit their website (listed under References).

System: SPECworkstation

This benchmark shows you individual scores per industry:

AI & Machine Learning

Energy

Financial Services

Life Sciences

Media & Entertainment

Product Design

Productivity & Development

Independently testing the major Hardware Subsystems:

Accelerator

CPU

Graphics

Storage

Application: SPECapc

There are multiple benchmarks, one for each application, including (individually): Siemens NX, Solidworks, Maya, Creo, 3ds Max

If your goal is to deeply understand one of these apps, this is the way to go

Puget Systems - Pugetbench [6]

Among the PC OEMs, Puget Systems is the one that has taken the “So What?” question most seriously, dedicating resources to developing and maintaining benchmarks. This is not an easy task, and in my honest opinion it is a very smart investment: their objective recommendations have become a tool for influencers and reviewers too, giving them a lot of visibility that shows their leadership and that they can translate into sales.

Pugetbench currently covers:

Adobe Premiere Pro

Adobe After Effects

Adobe Photoshop

Adobe Lightroom Classic

Blackmagic Design - DaVinci Resolve

Puget has a developer program to encourage experts like you to contribute knowledge, expertise and ultimately useful workloads! Please join them, your contributions have industry-wide impact. https://www.pugetsystems.com/pugetbench/development-program/

Conclusions

It’s important to make informed and objective (data-driven) decisions when purchasing Workstations for Professional Applications and Workflows. Having said that, a simple chart from an easy-to-run benchmark is not enough; think about your workflow and identify the phases where you need to increase productivity.

I celebrate the fact that there is little coverage overlap between SPEC benchmarks and Pugetbench, giving the industry a broader scope by complementing each other. At the end of the day, we are in this together and it makes no sense to compete in a space as specialized as this.

It’s critical that YOU, as an expert in your industry, contribute to the SPEC Committee [7] and/or the Pugetbench Developer Program [8]. This is particularly important if your expertise is in an application not covered yet! (By “yet”, I mean “until you help them”.)

Do you need help selecting the right Workstation for your Workflow or Professional Application? Ping me, I’m happy to help.

About the Author

This article was written by Hernán Quijano, Workstation Performance and Market Analyst at CavalryHQ. Our mission is to bridge the workstation industry and power users; improving guidance and removing technical roadblocks, so users unleash their talents focusing on accelerating software applications and workflows in Engineering, Media & Entertainment and A.I.

Disclaimers

Unless explicitly stated, this article has not been sponsored by any brand or organization | The author might personally own stock in one or multiple of the mentioned companies.

References:

[1] https://www.maxon.net/en/cinebench

[2] https://opendata.blender.org/

[3] https://en.wikipedia.org/wiki/Embarrassingly_parallel

[4] https://en.wikipedia.org/wiki/Amdahl%27s_law

[5] https://gwpg.spec.org/

[6] https://www.pugetsystems.com/pugetbench/

[7] https://gwpg.spec.org/participation/

[8] https://www.pugetsystems.com/pugetbench/development-program/